Pairing headlines with credibility alerts from fact-checkers, the public, news media, and even artificial intelligence can reduce people’s intention to share misinformation on social media, researchers report.

The dissemination of fake news on social media is a pernicious trend with dire implications for the 2020 presidential election.

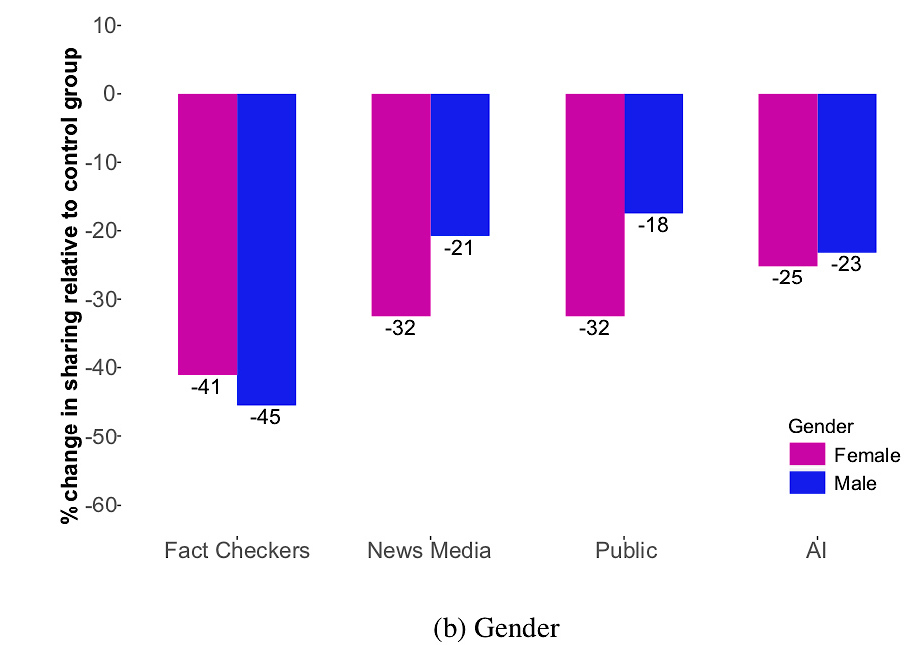

Indeed, research shows that public engagement with spurious news is greater than with legitimate news from mainstream sources, making social media a powerful channel for propaganda.

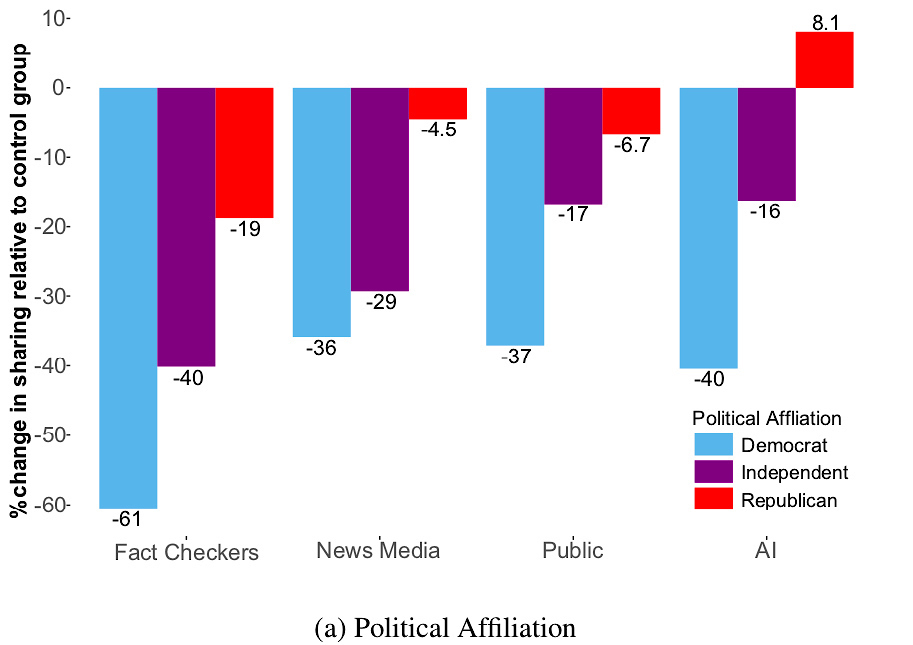

The new study also shows the effectiveness of alerts about misinformation varies with political orientation and gender.

The good news for truth seekers? People overwhelmingly trust official fact-checking sources.

The study goes further, examining the effectiveness of a specific set of inaccuracy notifications designed to alert readers to news headlines that are inaccurate or untrue. Nasir Memon, professor of computer science and engineering at the New York University Tandon School of Engineering, and Sameer Patil, visiting research professor at NYU Tandon and assistant professor in the Luddy School of Informatics, Computing, and Engineering at Indiana University Bloomington, led the work.

Warnings about misinformation on social media

The work involved an online study of around 1,500 individuals to measure the effectiveness among different groups of four so-called “credibility indicators” displayed beneath headlines:

- Fact Checkers: “Multiple fact-checking journalists dispute the credibility of this news”

- News Media: “Major news outlets dispute the credibility of this news”

- Public: “A majority of Americans disputes the credibility of this news”

- AI: “Computer algorithms using AI dispute the credibility of this news”

“We wanted to discover whether social media users were less apt to share fake news when it was accompanied by one of these indicators and whether different types of credibility indicators exhibit different levels of influence on people’s sharing intent,” says Memon. “But we also wanted to measure the extent to which demographic and contextual factors like age, gender, and political affiliation impact the effectiveness of these indicators.”

Participants—over 1,500 US residents—saw a sequence of 12 true, false, or satirical news headlines. Only the false or satirical headlines included a credibility indicator below the headline in red font. For all of the headlines, researchers asked respondents if they would share the corresponding article with friends on social media, and why.

“Upon initial inspection, we found that political ideology and affiliation were highly correlated to responses and that the strength of individuals’ political alignments made no difference, whether Republican or Democrat,” says Memon. “The indicators impacted everyone regardless of political orientation, but the impact on Democrats was much larger compared to the other two groups.”

The most effective of the credibility indicators, by far, was Fact Checkers: Study respondents intended to share 43% fewer non-true headlines with this indicator versus 25%, 22%, and 22% for the “News Media,” “Public,” and “AI” indicators, respectively.

How politics affects things

The team found a strong correlation between political affiliation and the propensity of each of the credibility indicators to influence intention to share.

In fact, the AI credibility indicator actually induced Republicans to increase their intention to share non-true news:

- Democrats intended to share 61% fewer non-true headlines with the Fact Checkers indicator (versus 40% for Independents and 19% for Republicans).

- Democrats intended to share 36% fewer non-true headlines with the News Media indicator (versus 29% for Independents and 4.5% for Republicans).

- Democrats intended to share 37% fewer non-true headlines with the Public indicator (versus 17% for Independents and 6.7% for Republicans).

- Democrats intended to share 40% fewer non-true headlines with the AI indicator (versus 16% for Independents).

- Republicans intended to share 8.1% more non-true news with the AI indicator.

Patil says that, while fact-checkers are the most effective kind of indicator regardless of political affiliation and gender, fact-checking is very labor-intensive. He says the team was surprised that Republicans were more inclined to share news that the AI indicator flagged as not credible.

“We were not expecting that, although conservatives may tend to trust more traditional means of flagging the veracity of news,” he says, adding that the team will next examine how to make the most effective credibility indicator—fact-checkers—efficient enough to handle the scale inherent in today’s news climate.

“This could include applying fact checks to only the most-needed content, which might involve applying natural language algorithms. So, it is a question, broadly speaking, of how humans and AI could co-exist,” he explains.

The team also found that males intended to share non-true headlines one and a half times more than females, with the differences largest for the Public and News Media indicators.

Credibility indicators are less likely to influence men, who are more inclined to share fake news on social media. But indicators, especially those from fact-checkers, reduce intention to share fake news across the board.

Socializing was the dominant reason respondents gave for intending to share a headline. For fake stories, the top-reported reason was that respondents considered them funny.

The research appears in ACM Digital Library.

Source: NYU

The post Putting ‘red flags’ on misinformation may cut sharing appeared first on Futurity.
